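- Kubernetes Master: The Master node is the gateway and hub of a Kubernetes cluster. It maintains the cluster's health, schedules work, orchestrates Pods, and communicates with the other nodes. The Master consists mainly of the API Server, the Controller Manager, and the Scheduler, plus etcd, which stores the cluster state (cluster information is kept in etcd and shared with the cluster's components and clients through the API Server).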

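- Kubernetes Slave: Slave nodes are the worker nodes of a Kubernetes cluster. They receive instructions from the Master node and carry them out in the cluster, for example creating Pods, destroying Pods, and adjusting traffic rules.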

- Kubernetes Node: The core component of a Node is the kubelet, which registers its node with the API Server and periodically reports the node's resource usage to the Master.

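- Kube-Proxy: Every worker node runs a kube-proxy daemon. It generates iptables or IPVS rules for each Service object as needed, so that traffic sent to a Service's Cluster IP is captured and forwarded to the correct backend Pod.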

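- Pod: In Kubernetes the smallest unit of scheduling is not a bare container but an abstraction called the Pod. A Pod is the smallest deployable unit that can be created, destroyed, scheduled, and managed, and it wraps one container or a tightly coupled group of containers.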

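- Service: A Service is a resource abstraction built on top of Pod objects. It selects a group of Pods with a label selector and defines a fixed access point for them (usually an IP address, called the Service IP or Cluster IP). If the cluster runs a DNS add-on, the add-on creates a DNS name for the Service so that clients can discover it. Requests arriving at the Service IP are load-balanced across the backend Pods, and a Service can also bring traffic from outside the cluster into it. In essence, a Service is therefore a layer-4 (transport-layer) proxy.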

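- Replication Controllers: The ReplicationController is one of the most useful features of Kubernetes. It keeps a specified number of replicas of a Pod running, since an application often needs several Pods to serve it: even if the host a replica was scheduled on fails, the ReplicationController starts the same number of Pods on other hosts. It creates Pod replicas from its Pod template and can also adopt existing Pods, which it associates with itself through a label selector. It has since been superseded by the ReplicaSet.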
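
To make the Service and kube-proxy descriptions above more concrete, here is a toy Python model: an equality-based label selector picks the backend Pods, and each new connection to the Service IP is handed to the next backend in turn. The class `ToyServiceProxy`, the Pod names, and the IP addresses are all hypothetical. Real kube-proxy does not run code like this; it programs iptables or IPVS rules, and the round-robin choice here is a simplification (close to IPVS's default `rr` scheduler, whereas iptables mode picks a backend at random).

```python
from __future__ import annotations

from dataclasses import dataclass, field
from itertools import cycle


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict[str, str] = field(default_factory=dict)


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    # Equality-based selector: every key=value pair in the selector
    # must also appear in the Pod's labels.
    return all(labels.get(k) == v for k, v in selector.items())


def select_endpoints(selector: dict[str, str], pods: list[Pod]) -> list[str]:
    """Return the IPs of the Pods the Service would route to."""
    return [p.ip for p in pods if matches(selector, p.labels)]


class ToyServiceProxy:
    """Hypothetical stand-in for the forwarding rules kube-proxy installs."""

    def __init__(self, cluster_ip: str, selector: dict[str, str], pods: list[Pod]):
        self.cluster_ip = cluster_ip
        self.endpoints = select_endpoints(selector, pods)
        if not self.endpoints:
            raise ValueError("no Pods match the selector")
        # Hand out backends in a fixed rotation (simplified load balancing).
        self._next = cycle(self.endpoints)

    def route(self) -> str:
        """Pick the backend Pod IP for one new connection to cluster_ip."""
        return next(self._next)


pods = [
    Pod("web-1", "10.244.1.5", {"app": "web", "tier": "frontend"}),
    Pod("web-2", "10.244.2.7", {"app": "web", "tier": "frontend"}),
    Pod("db-1", "10.244.1.9", {"app": "db"}),
]
svc = ToyServiceProxy("10.96.0.10", {"app": "web"}, pods)
print([svc.route() for _ in range(4)])
# ['10.244.1.5', '10.244.2.7', '10.244.1.5', '10.244.2.7']
```

The `db-1` Pod never receives traffic because its labels do not satisfy the selector `app=web`; this is the same mechanism by which a Service decouples clients from individual Pod IPs.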
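
The replica-count guarantee described above works as a reconcile loop: compare the observed number of Pods with the desired number, then create or delete Pods to close the gap. The following is a minimal sketch under simplifying assumptions. `ToyReplicaController`, the host names, and the least-loaded placement rule are all hypothetical, and in a real cluster the controller only creates Pod objects while the Scheduler decides which node each one runs on.

```python
from __future__ import annotations

import itertools


class ToyReplicaController:
    """Hypothetical sketch of the reconcile loop behind a ReplicationController/ReplicaSet."""

    def __init__(self, name: str, replicas: int, hosts: list[str]):
        self.name = name
        self.replicas = replicas              # desired replica count
        self.healthy_hosts = set(hosts)
        self.pods: dict[str, str] = {}        # pod name -> host it runs on
        self._ids = itertools.count(1)

    def fail_host(self, host: str) -> None:
        # Simulate a host failure: the host and every Pod on it are lost.
        self.healthy_hosts.discard(host)
        self.pods = {p: h for p, h in self.pods.items() if h != host}

    def reconcile(self) -> dict[str, str]:
        """Drive the observed Pod count toward the desired count."""
        while len(self.pods) < self.replicas:
            if not self.healthy_hosts:
                raise RuntimeError("no healthy hosts to place Pods on")
            # Toy placement rule: least-loaded healthy host, ties broken by name.
            load = {h: 0 for h in self.healthy_hosts}
            for h in self.pods.values():
                load[h] += 1
            host = min(sorted(load), key=lambda h: load[h])
            self.pods[f"{self.name}-{next(self._ids)}"] = host
        while len(self.pods) > self.replicas:
            # Scale down by deleting the most recently created Pod.
            self.pods.popitem()
        return dict(self.pods)


rc = ToyReplicaController("web", replicas=3, hosts=["node1", "node2"])
print(rc.reconcile())  # {'web-1': 'node1', 'web-2': 'node2', 'web-3': 'node1'}
rc.fail_host("node1")
print(rc.reconcile())  # {'web-2': 'node2', 'web-4': 'node2', 'web-5': 'node2'}
```

After `node1` fails, the loop notices only one replica is left and starts two new Pods on the remaining host, which is the behavior the bullet above describes.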

1. System preparation

2. Add the Alibaba Cloud (Aliyun) repository

3. Install Docker and the Kubernetes packages

4. Initialize the Kubernetes cluster

5. Install the network plugin

6. Create the kubernetes-dashboard certificate

7. Install kubernetes-dashboard

8. Add an authorized user

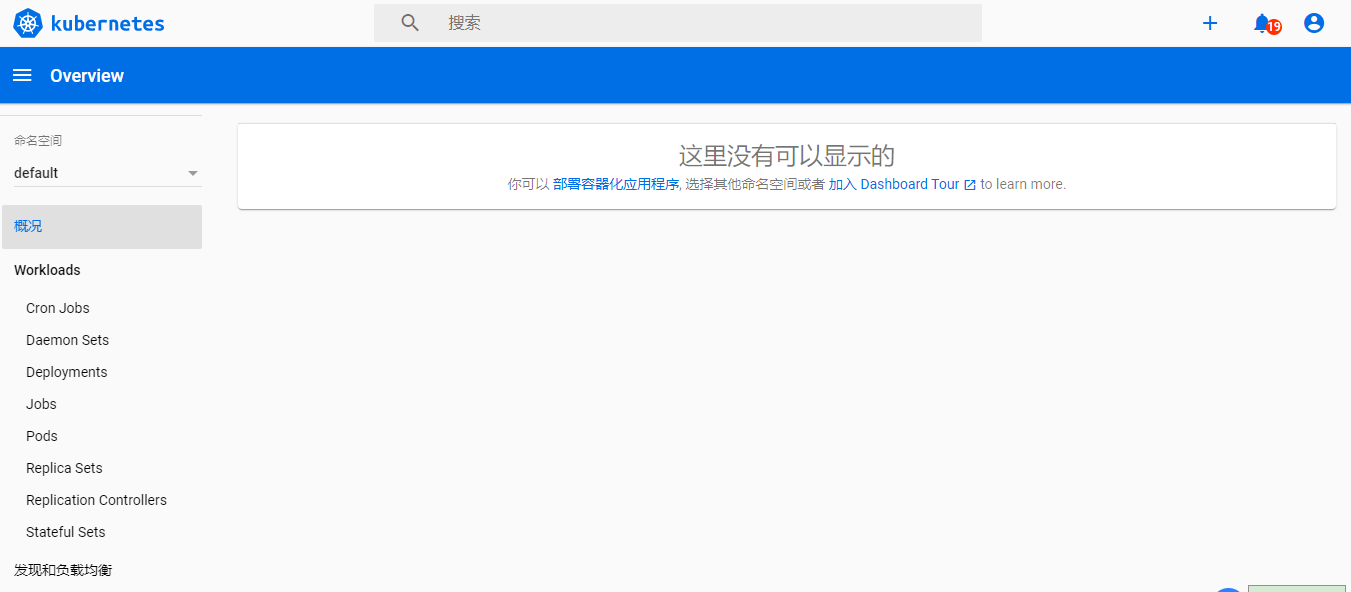

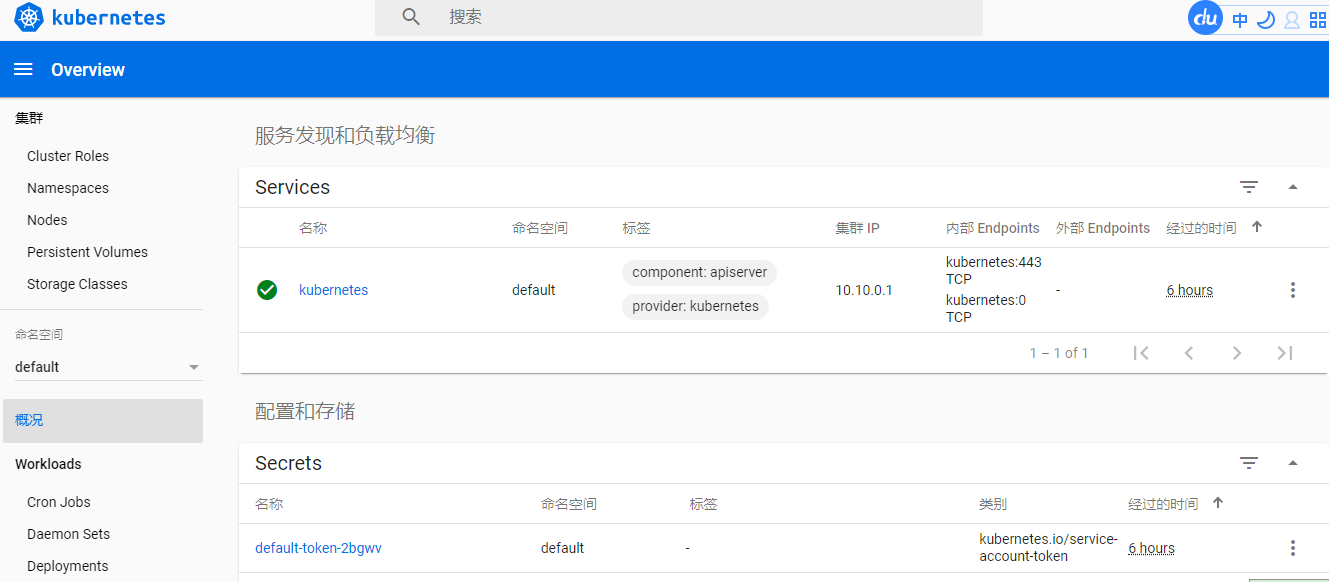

9. Verify login after installation

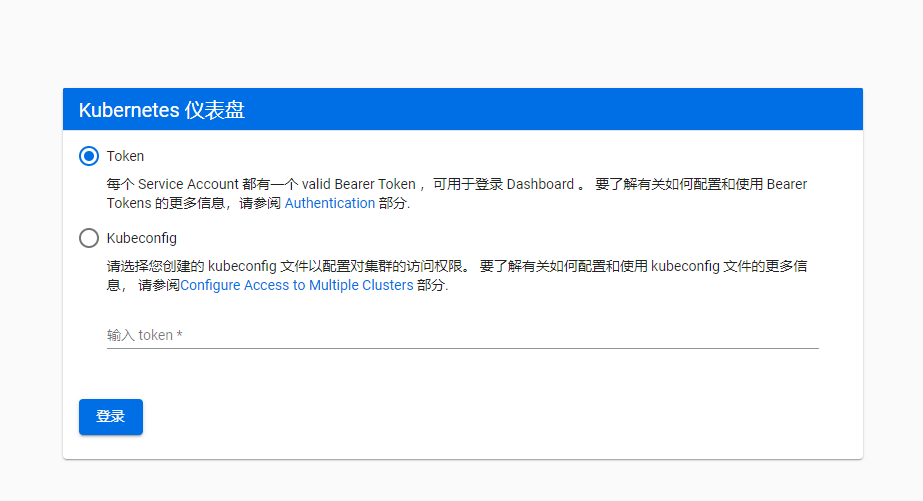

10. Log in with a token

11. Other errors